A Little Hard Drive History and the Big Data Problem

Posted by Tyler Muth on November 2, 2011

Most of the “Big Data Problems” I see are related to performance. Most often these are long-running reports or batch processes, but they also include data loads. I see a lot of these because one of my primary roles is leading Exadata “Proof of Value” (POV) engagements with customers. It’s my observation that over 90% of the time, the I/O throughput of the existing system is grossly under-sized. To be fair, the drives themselves in a SAN or NAS are usually not the bottleneck; more often than not it’s the 2x 2 Gbps or 2x 4 Gbps Fibre Channel connection to the SAN. However, the metrics for hard drive performance and capacity over the last 30 or so years were a lot easier to find, so that’s what this post is about.

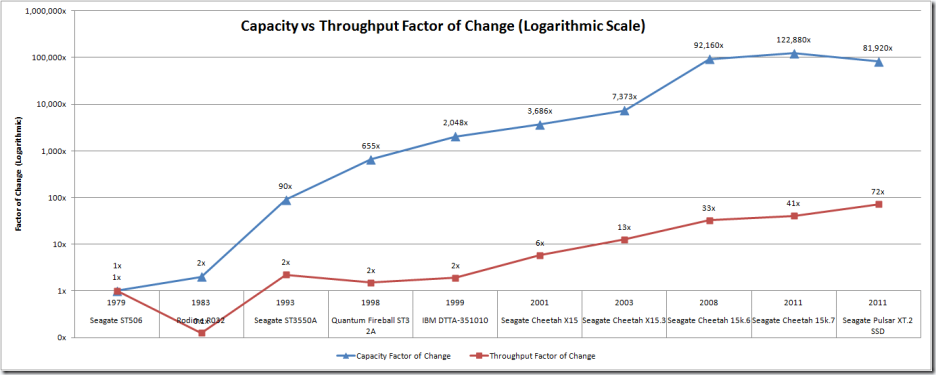

In short, our capacity to store data has far outpaced our ability to process that data. Here’s an example using “personal” class drives to illustrate the point. In the year 2000 I could buy a Maxtor UDMA 20 GB hard drive connected locally via (P)ATA which provided roughly 11 MB/s of throughput (the drive might be limited to a bit less, but not much). Today, I can buy a 3 TB Seagate Barracuda XT ST32000641AS that can sustain 123 MB/s of throughput. So, let’s say I need to scan (search) my whole drive. 11 years ago that would take 31 minutes. Today it would take 7 hours! What?!?! The time it takes to search the data I can store has increased by a factor of 14x. How can this be? What about Moore’s Law? Sorry, that only works for processors. Oh right, I meant Kryder’s Law. Kryder’s Law states that magnetic disk areal storage density doubles annually and has nothing to do with performance. Well, actually this phenomenon is half the problem, since throughput isn’t doubling annually.
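As a rough check on that arithmetic, here is a minimal Python sketch. It assumes binary units (1 GB = 1024 MB, 1 TB = 1024 GB) and that each drive sustains its quoted throughput for the whole scan; the function name `full_scan_seconds` is made up for illustration.

```python
def full_scan_seconds(capacity_mb: float, throughput_mb_s: float) -> float:
    """Seconds to read an entire drive sequentially at a sustained rate."""
    return capacity_mb / throughput_mb_s

# Figures from the example above; binary units are an assumption.
old = full_scan_seconds(20 * 1024, 11)         # 20 GB drive at ~11 MB/s
new = full_scan_seconds(3 * 1024 * 1024, 123)  # 3 TB drive at ~123 MB/s

print(f"2000: {old / 60:.0f} minutes")
print(f"2011: {new / 3600:.1f} hours")
print(f"Slowdown: {new / old:.0f}x")
```

Capacity grew about 154x between the two drives while throughput grew only about 11x, which is where the roughly 14x longer scan comes from.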

I did a little research and found some relevant data on seagate.com, tomshardware.com, and of course The Wayback Machine. Now on to the data and some graphs:

| Drive | Seagate ST506 | Rodime R032 | Seagate ST3550A | Quantum Fireball ST3 2A | IBM DTTA-351010 | Seagate Cheetah X15 | Seagate Cheetah X15.3 | Seagate Cheetah 15k.6 | Seagate Cheetah 15k.7 | Seagate Pulsar XT.2 SSD |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Year | 1979 | 1983 | 1993 | 1998 | 1999 | 2001 | 2003 | 2008 | 2011 | 2011 |
| Capacity (MB) | 5 | 10 | 452 | 3276 | 10240 | 18432 | 36864 | 460800 | 614400 | 409600 |
| Capacity (GB) | 0.005 | 0.010 | 0.441 | 3.2 | 10 | 18 | 36 | 450 | 600 | 400 |
| Throughput (MB/s) | 5 | 0.6 | 11.1 | 7.6 | 9.5 | 29 | 63.6 | 164 | 204 | 360 |
| Capacity Factor of Change | 1x | 2x | 90x | 655x | 2,048x | 3,686x | 7,373x | 92,160x | 122,880x | 81,920x |
| Throughput Factor of Change | 1x | 0.1x | 2x | 2x | 2x | 6x | 13x | 33x | 41x | 72x |
| Capacity Percent Change | 0% | 100% | 8,940% | 65,436% | 204,700% | 368,540% | 737,180% | 9,215,900% | 12,287,900% | 8,191,900% |
| Throughput Percent Change | 0% | -88% | 122% | 52% | 90% | 480% | 1,172% | 3,180% | 3,980% | 7,100% |

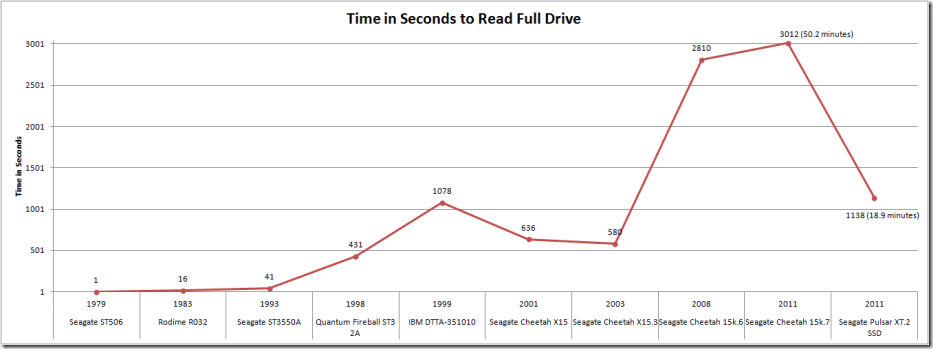

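| Drive | Seagate ST506 | Rodime R032 | Seagate ST3550A | Quantum Fireball ST3 2A | IBM DTTA-351010 | Seagate Cheetah X15 | Seagate Cheetah X15.3 | Seagate Cheetah 15k.6 | Seagate Cheetah 15k.7 | Seagate Pulsar XT.2 SSD |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Time in Seconds to Read Full Drive | 1 | 16 | 41 | 431 | 1078 | 636 | 580 | 2810 | 3012 | 1138 |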
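The derived rows (factor of change and time to read the full drive) can be recomputed from the capacity and throughput figures. The Python sketch below is one way to do that, not necessarily how the original numbers were produced; it assumes the 1979 Seagate ST506 is the baseline for the factors, and the `derive` helper is a made-up name.

```python
# (name, year, capacity in MB, throughput in MB/s), copied from the table above
DRIVES = [
    ("Seagate ST506", 1979, 5, 5),
    ("Rodime R032", 1983, 10, 0.6),
    ("Seagate ST3550A", 1993, 452, 11.1),
    ("Quantum Fireball ST3 2A", 1998, 3276, 7.6),
    ("IBM DTTA-351010", 1999, 10240, 9.5),
    ("Seagate Cheetah X15", 2001, 18432, 29),
    ("Seagate Cheetah X15.3", 2003, 36864, 63.6),
    ("Seagate Cheetah 15k.6", 2008, 460800, 164),
    ("Seagate Cheetah 15k.7", 2011, 614400, 204),
    ("Seagate Pulsar XT.2 SSD", 2011, 409600, 360),
]

def derive(drives):
    """Return (name, capacity factor, throughput factor, full-read seconds) per drive."""
    # Assumption: the first drive in the list is the 1x baseline.
    _, _, base_cap, base_tp = drives[0]
    return [
        (name, cap / base_cap, tp / base_tp, cap / tp)
        for name, _, cap, tp in drives
    ]

for name, cap_x, tp_x, secs in derive(DRIVES):
    print(f"{name:<26} capacity {cap_x:>9,.0f}x  throughput {tp_x:>5.1f}x  full read {secs:>6,.0f} s")
```

Small differences from the table, such as the Rodime's full-read time, probably come down to rounding in the source figures.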

So, what does all of this mean? Well, to me it means if you’re architecting a data warehouse or even small data mart, make sure you focus on the storage. Over and over again I get drawn into discussions about the minutiae of chip speeds or whether Linux is faster than Solaris, yet when I ask about the storage the answer is almost universally “we’ll throw it on the SAN”. OK, how many HBAs and what speed? Is the SAN over-utilized already? etc, etc, etc.

So, how does this relate to Exadata? The central focus of Exadata is I/O throughput. By including processing power in the storage servers, Exadata has a fixed relationship between capacity and throughput. As you add capacity, you add throughput.

Tyler Muth said

Just a minor update: It looks like Seagate has an error in the spec sheet for the drive I used in the “personal” drive example. They used a small ‘b’ instead of a big ‘B’, so I read it as megaBITS/s instead of megaBYTES/s. The number seemed way off when I re-read my post, so I confirmed the throughput of a comparable drive from Western Digital.

Noons said

Yeah, the whole HBA bottleneck is rarely if ever mentioned… I just had a perfect proof of its importance in a recent migration where, as I suspected, moving from a Clarion to a Symmetrix did nothing for our I/O bandwidth.

While moving from 1X4Gbps to 2X4Gbps resulted in a “magic” improvement of doubling our I/O bandwidth in one single, simple, cheap upgrade.

Now I still have to convince the cabling “experts” at our site that having a server on the 14th floor and a SAN on the 4th, with 150metres of FC cable in-between is likely not the best configuration for top IOPS…

But that’s a completely other matter, bundled with “I’m sure the problem is with the database” and “fc works at the speed of light, cable length cannot be the problem” and other such pearls of utter idiocy…

Tyler Muth said

Maybe they need a math lesson. 1X4Gbps FC = ~400 MB/s (simplex). Let’s say they were using 15k RPM drives (the top drives can push 600 MB/s) in the Clarion, which are connected internally by a 4 Gbps interface (so the drive is limited to 400 MB/s). So, with only 1 of those drives they could saturate the 1X4Gbps FC. You should probably mirror those, so better make it 2 drives. Shocking that moving to the Symmetrix did nothing. How about 4x 8 Gbps FC? 3200 MB/s is nothing to sneeze at.

From a throughput perspective you’d be a lot better off with a single PCIe 2 x8 RAID card (like this one http://sn.im/2hvg3c) with 8 internal 15k SAS 6 Gbps drives. That would give you about 4 GB/s (gigabytes) of throughput with 1 GB of dedicated cache (though you have to buy the battery unit) for about $7,000. Happy to be proven wrong on my math here. IOPs are obviously a different story with only 8 drives (~2300 IOPs) and there are a number of other considerations like the card being the single point of failure, SAN should have a MUCH bigger cache, etc. Of course the SAN comes with a lot of baggage too like configuration details at many levels, multi-pathing software, it’s a complete black-box to troubleshoot performance, storage groups not talking to the DBAs and even when they do neither speaks the same language (technical language that is).

Yeah, in the last year of working with a lot of big customers (public sector US), I’ve never seen anything faster than 2x 4 Gbps FC for an aggregate of ~800 MB/s of throughput (simplex or one-way). Have to full scan a 500 GB table? Just to move the blocks it’s going to take (500*1024)/800 = 640 seconds, then the database has to filter those blocks. Need to load 500 GB of data? The same math applies. Need to gather stats with a 100% sample? Yep, 640 seconds minimum. Then you look at a 1/4 rack Exadata with 5.4 GB/s of disk bandwidth and 16 GB/s of Flash bandwidth (1.1 TB of Flash in a 1/4), and I know I’m bringing a missile to a knife fight. It’s not a fair fight, but I sure have fun with it 😉
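For anyone who wants to plug in their own link counts, the same math can be sketched in Python. This assumes the rule of thumb used above (each 1 Gbps of Fibre Channel moves roughly 100 MB/s one way) and ignores everything except the wire; the function names are made up for illustration.

```python
# Rule of thumb taken from the figures above: 4 Gbps FC moves ~400 MB/s one way.
MB_S_PER_GBPS = 100

def fc_throughput_mb_s(links: int, gbps_per_link: int) -> int:
    """Approximate aggregate one-way throughput of a set of FC links."""
    return links * gbps_per_link * MB_S_PER_GBPS

def transfer_seconds(data_gb: float, throughput_mb_s: float) -> float:
    """Minimum seconds just to move the data, before any database work."""
    return data_gb * 1024 / throughput_mb_s

TABLE_GB = 500
for links, gbps in [(1, 4), (2, 4), (4, 8)]:
    rate = fc_throughput_mb_s(links, gbps)
    secs = transfer_seconds(TABLE_GB, rate)
    print(f"{links}x {gbps} Gbps FC: ~{rate} MB/s, {secs:.0f} s to move {TABLE_GB} GB")
```

These are floors, not estimates: a shared or over-utilized SAN will do worse.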

Noons said

My next setup test is going to be with 2, 3 and 4X 4Gbps FC channels, to check the linearity of bandwidth growth.

This is on Power6 and I can’t use native, I have to use SAN – IT “policies” created by idiots with less brains than a pinhead…

Anyways, one of the things I’ll be trying is scaling the HBAs through VIO, using an lpar on Power6. So far I’ve seen some excellent results with that setup but I doubt VIO will scale as linearly as a direct attach.

IBM, of course, claims it will scale, if it doesn’t then it must be “something wrong with the database”. When will they learn… 🙂

Thanks for the excellent info, great post!

Hemant K Chitale said

>“fc works at the speed of light, cable length cannot be the problem”

Yes, I’ve heard that a few times.

The concept of latency doesn’t seem to exist in the real world.

Hemant K Chitale said

Useful post. Nobody, but nobody, ever thinks that the HBA and Interconnect are a problem.

We look at the I/O throughput of FullTableScans and look at Disk I/O statistics and wonder why the system is slow. We forget what’s between the disks and the server.

Tyler Muth said

Just as a reference point, here’s a link to some performance metrics for the new Oracle Database Appliance: http://sn.im/oda-metrics . ~$50k in hardware including 4 TB of usable storage and you get 3000 MB/s of I/O throughput. Not too bad in the context of this discussion. It has some obvious limitations but is a good example of a purpose-built solution that delivers a lot of performance where it counts.

Going back to my previous comment, 4x 8Gbps FC in conjunction with enough drives on the SAN would match this and could far exceed it in IOPS since you can add more disks and should have a much larger cache. However Noons, if you were to take a picture of your P6 next to your Symmetrix, then compare it to a picture of the little 4U ODA and ask people which system looks faster (more throughput, since there are many definitions of fast), what do you think the answer would be 95% of the time? 🙂

Hope this didn’t sound too much like an ODA sales pitch, just using it as an interesting reference point.

Noons said

No argument here. I wish I could convince the powers that be to get me 4X8Gbps HBAs. And if it depended on me, we’d be on ODA now: I hate this “one SAN for everything” idiotic strategy I’m forced to follow.

Unfortunately when I had a chance to change things, I got no support from the local Oracle sales pitch other than “just outsource the expensive dba”. It’s gonna take ages now to change the status quo, given that everyone has just drunk the EMC kool-aid that a single Symmetrix can do everything and brew coffee as well.

Ah well, what can I say other than: “never underestimate the capacity of Oracle Australia to shoot themselves in the foot”…

😉


database services said

Here is a nice conclusion; we never thought in this direction. When a system is hit by a performance issue, all the other things are taken into consideration and analyzed in detail. This page will help us look at performance issues from different directions.
